After the churn analysis of the Environment Agency press releases (please read that article for more details and important caveats if you haven't read one of my churn posts before), I followed up with DEFRA. I will be making the raw data publicly available tomorrow for both the Environment Agency and DEFRA churn analyses.

This time I was able to construct the spiders and process the data faster. I also avoided (most of) the unicode problems that plagued me with the EA data, so this analysis can be considered slightly more accurate and slightly less forgiving of the media organisations. It still strictly follows the rules I set down previously, such as no editing of the press release to remove extraneous information. Between that rule and the inevitable difficult characters that still slip through, the data will naturally favour the media organisations. As I said in previous posts, when I make the raw data publicly available, churn analysis by other people will very likely improve upon my methods and yield results more detrimental to the media.

In any case - onto the results. The summary is presented below (click for full size image):

"Quality" press churn results

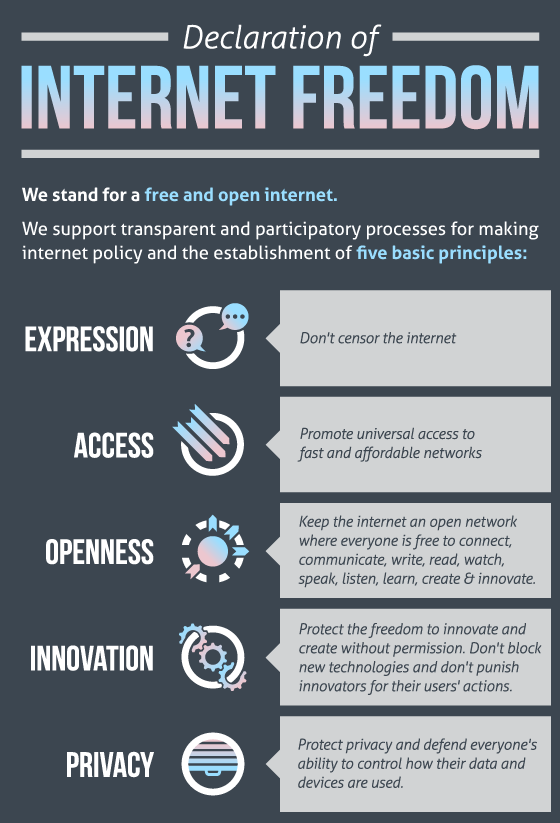

Summary of results:

- A total of 386 press releases were analysed, from 13th May 2010 to 24th November 2011. These generated 1959 detectable cases of churn. Again, there is probably a lot of interesting data within the "detectable" category that deserves analysis at a later date. For now it is discarded.

- Out of those, 173 were classified as "significant" and 18 as "major".

- The Guardian was the leader in both categories by a long way - accounting for 19.65% of significant churn and 27.78% of major churn.

- The BBC followed close behind on significant churn with 16.18%, though on major churn it was beaten into third place by both the Independent and the Daily Mail, with a joint 16.67%.

- The Independent came third in the significant churn classification.

- A common factor in the most highly churned articles both in this analysis and the previous two appears to be lack of a named author in most cases (though see one of the exceptions detailed below). This suggests the media organisations are aware that what they are doing is not kosher.

- Continuing a theme from the last two churn analyses, the tabloids consistently embarrass the so-called "quality press". This time I pulled out the statistics for the UK's major tabloids for comparison (click for full size image):

Tabloid press churn results
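For anyone who wants to sanity-check the "quality" press percentages quoted in the summary, here is a rough sketch that turns them back into article counts. The totals (173 significant, 18 major) and the percentages come from the summary; the counts are only what the arithmetic implies, and I am assuming "joint 16.67%" means the Independent and the Daily Mail each had that share. The names `totals`, `shares` and `implied_count` are just for illustration.

```python
# Totals taken from the summary above.
totals = {"significant": 173, "major": 18}

# Percentages quoted in the summary, keyed by (outlet, category).
# Assumption: "joint 16.67%" is read as 16.67% for each outlet.
shares = {
    ("Guardian", "significant"): 19.65,
    ("Guardian", "major"): 27.78,
    ("BBC", "significant"): 16.18,
    ("Independent", "major"): 16.67,
    ("Daily Mail", "major"): 16.67,
}


def implied_count(percent: float, total: int) -> int:
    """Nearest whole number of articles that a percentage of a total implies."""
    return round(percent / 100 * total)


counts = {
    key: implied_count(percent, totals[key[1]])
    for key, percent in shares.items()
}

for (outlet, category), count in counts.items():
    print(f"{outlet}: {count} of {totals[category]} {category} cases")
```

Each implied count rounds back to the quoted percentage (for example 34 / 173 is 19.65%), so the figures are at least internally consistent.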

As usual I have selected a few of the more egregious cases of churn for your entertainment (and, importantly, provided a manual submission to the churnalism database so they can be seen visually):

'Gloucestershire Old Spots pork protected by Europe'

An absolutely cracking BBC 79% cut and paste job on - er - crackling.

'Bonfire of the Quangos'

Remember that list of Quangos that were to go? Completely cut and pasted from a press release. This one is particularly fascinating because in the two worst cases the cut and paste was the list provided in the press release. The press release actually included several paragraphs laying out a context that was not cut and pasted across. If the original had contained just the list, both would have scored close to 100% pastes.

The pastes are so large in any case that the churnalism engine falls over when the 'view' button is clicked to see the visualised version. Be warned if you click it, your browser may hang.
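To illustrate why the extra context paragraphs drag the score down, here is a toy sketch. It is not the churnalism engine's algorithm and not the exact method behind my figures; it simply measures what fraction of a press release's words reappear in an article as part of a shared run of words, using Python's standard `difflib`. The function `paste_share`, the `min_words` threshold and the sample texts are all made up for the example.

```python
from difflib import SequenceMatcher


def paste_share(press_release: str, article: str, min_words: int = 3) -> float:
    """Fraction of the press release's words that reappear in the article
    inside a shared run of at least `min_words` consecutive words."""
    pr_words = press_release.lower().split()
    art_words = article.lower().split()
    if not pr_words:
        return 0.0
    # Compare word lists rather than characters; autojunk is disabled so
    # that frequent words are not silently ignored on longer texts.
    matcher = SequenceMatcher(None, pr_words, art_words, autojunk=False)
    copied = sum(
        block.size
        for block in matcher.get_matching_blocks()
        if block.size >= min_words
    )
    return copied / len(pr_words)


# Made-up stand-ins for a press release list, its context, and an article.
quango_list = (
    "the following bodies will be abolished "
    "alpha board beta council gamma agency"
)
context = "ministers today set out the background to the review of public bodies"
article = "it was announced that " + quango_list

list_only = paste_share(quango_list, article)
with_context = paste_share(context + " " + quango_list, article)

print(f"list only:    {list_only:.0%}")
print(f"with context: {with_context:.0%}")
```

The article copies the whole list in both cases, but once the uncopied context is counted as part of the press release the share halves, which is the same effect that keeps the scores above from reaching 100%.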

'New service for householders to stop unwanted advertising mail'

Absolute carnage on the churning front here with the majority of the main media outlets represented. The Guardian appeared to like this story so much they cut and pasted it twice - and this time each article has a named author. Where the hell was the editor?